Aim: To upgrade/migrate (side-by-side) SQL Server 2014 Availability Group(s) running on Windows Server 2012 R2 to SQL Server 2019 running on Windows Server 2016 with the least amount of downtime.

A couple of years ago, I wrote a blog post explaining how to upgrade the Windows OS from 2012 R2 to 2016 on nodes participating in failover clustering with minimal downtime, using the rolling upgrade technique. In this post I will share something similar, but with SQL Server availability groups thrown into the mix. So let me briefly explain what we are trying to achieve here.

Current environment:

I have a two-node failover cluster (Windows Server 2012 R2) hosting a SQL Server 2014 Always On Availability Group in synchronous commit mode. I have a listener configured for my applications to connect to. These replicas are running the latest build of SQL 2014 as of the date this post is published.

As you can see, W12SQL2016A/B are my two replicas (nodes), which are running Win2012R2 + SQL 2014.

Originally I thought of installing SQL 2016 (hence the host names), but ended up installing SQL 2014 for now based on our specific requirement. I didn’t want to change the host names, as I had my Windows failover cluster all set up by this time and I really didn’t want to deal with fixing any annoying errors that might pop up from renaming my nodes. Anyways…the bottom line is I have a SQL 2014 AG running on Win 2012R2 which needs to be upgraded/migrated to SQL 2019 running on Windows 2016.

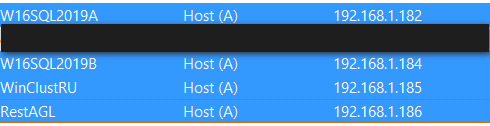

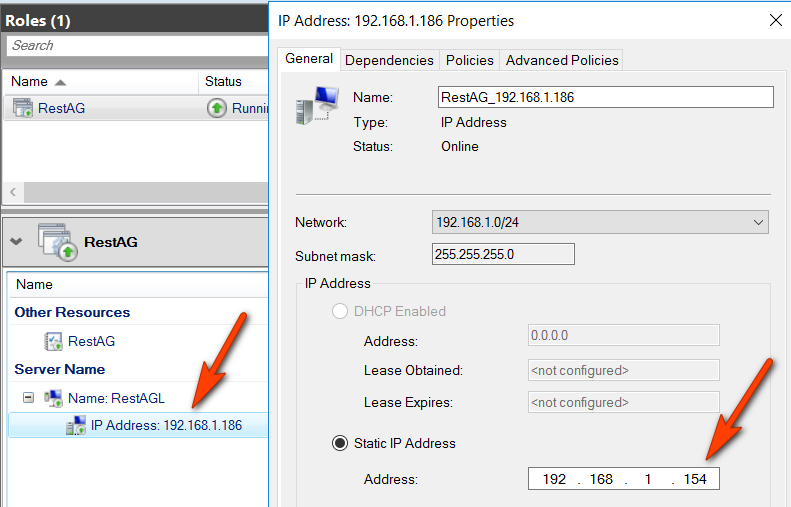

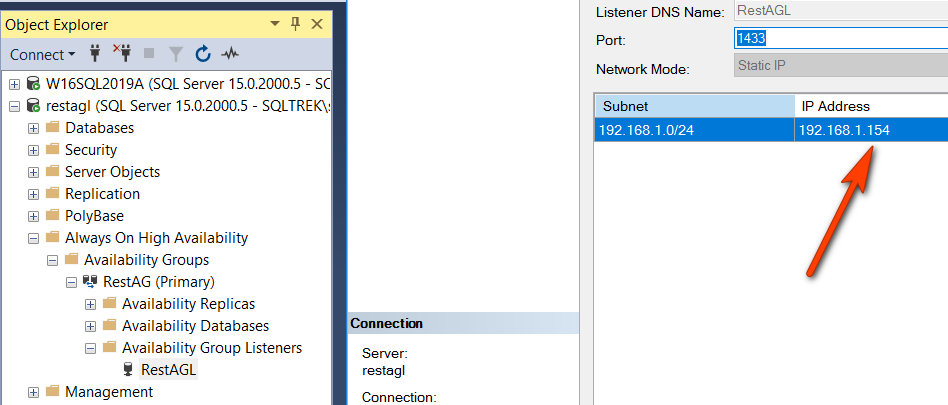

Listener Name: RestAGL

Goal:

To upgrade these SQL instances to 2019 running on Windows Server 2016 with minimal downtime and no configuration changes for the app teams, assuming in-place upgrades are not allowed.

What’s the high level plan:

- Take Full Backups.

- Add W16SQL2019A and W16SQL2019B nodes to the same windows cluster leveraging mixed mode.

- Install SQL 2019 and add these two nodes as replicas at SQL Server AOAG layer.

- Join the databases and let the magic happen.

- During the final cutover date/time, failover to SQL 2019 and remove the old replicas from AG.

- Evict both Windows 2012 R2 nodes from the cluster and raise the cluster functional level to 2016.
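For the first item in the plan, a full backup could look something like the sketch below. The share path is a placeholder I made up for illustration; "American" is the sample database used later in this post. Repeat for every database in the AG.

```sql
-- Run on the current primary (W12SQL2016A).
-- Assumption: \\backupshare\sql is a hypothetical path; use your own backup location.
BACKUP DATABASE [American]
TO DISK = N'\\backupshare\sql\American_full.bak'
WITH CHECKSUM, COMPRESSION, STATS = 10;

-- Optionally confirm the backup file is readable before going any further.
RESTORE VERIFYONLY
FROM DISK = N'\\backupshare\sql\American_full.bak'
WITH CHECKSUM;
```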

Now, let’s see this in action one step at a time.

Below is the screenshot of all the SQL instances I will be working on. To begin with, I have two brand new SQL Server 2019 standalone instances (W16SQL2019A and W16SQL2019B), on which I have just enabled the Always On Availability Groups (HADR) feature.
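If you want to double check that the feature is really enabled on the new instances, a quick query like this should do. It is just a sanity check, not part of the original steps.

```sql
-- Run on each new instance (W16SQL2019A and W16SQL2019B).
-- IsHadrEnabled returns 1 when Always On Availability Groups is enabled, 0 when it is not.
SELECT SERVERPROPERTY('MachineName')    AS machine_name,
       SERVERPROPERTY('ProductVersion') AS product_version,
       SERVERPROPERTY('IsHadrEnabled')  AS is_hadr_enabled;
```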

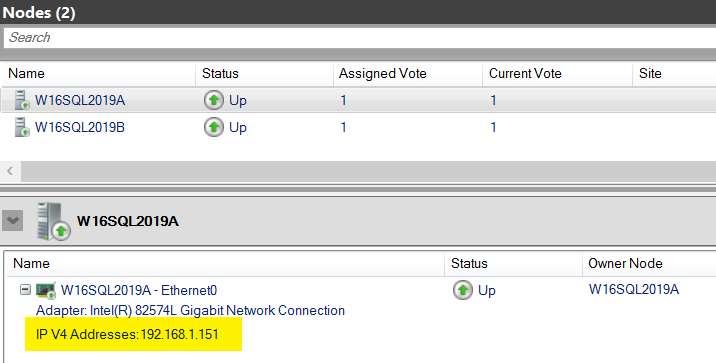

Let’s go. I added the new Windows Server 2016 nodes to the existing Windows failover cluster, which is running at the 2012 R2 functional level.

Note: You don’t want to run WSFC in mixed mode for long periods. Microsoft might not support you if you stay in mixed mode for more than 4 weeks. Mixed mode exists only so you can perform rolling upgrades and keep your systems highly available while you do it. Wrap up the entire process in a day or two and be done with it.

This is expected. For more details on this, hop on to the blog post that I mentioned at the beginning of this post.

Now it’s time to jump into SQL Server to add these servers as replicas into our AG.
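I used the GUI for this, but the T-SQL equivalent would be roughly as below. Treat it as a sketch under assumptions: `[YourAG]` is a stand-in for your availability group name (not the real one from my setup), the endpoint URLs and port 5022 are placeholders, and a database mirroring endpoint with the right permissions is assumed to already exist on the new instances.

```sql
-- Step 1: run on the current primary (W12SQL2016A).
-- New replicas start as asynchronous with manual failover.
ALTER AVAILABILITY GROUP [YourAG]
ADD REPLICA ON N'W16SQL2019A'
WITH (
    ENDPOINT_URL      = N'TCP://W16SQL2019A.yourdomain.local:5022', -- hypothetical URL
    AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
    FAILOVER_MODE     = MANUAL
);

ALTER AVAILABILITY GROUP [YourAG]
ADD REPLICA ON N'W16SQL2019B'
WITH (
    ENDPOINT_URL      = N'TCP://W16SQL2019B.yourdomain.local:5022', -- hypothetical URL
    AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
    FAILOVER_MODE     = MANUAL
);

-- Step 2: run separately on EACH new replica (W16SQL2019A, then W16SQL2019B).
ALTER AVAILABILITY GROUP [YourAG] JOIN;
```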

Awesome, so far so good 🙂

Let’s move on….Connecting to one of the SQL 2019 instances, below is what I have. Oops!!

I had to join the individual databases to the AG manually from both of the newly added SQL 2019 replicas. Once that was done, below is how my dashboard looked.
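In T-SQL, the manual join looks roughly like this. The restore statements are only needed if the database is not already sitting in a restoring state on the new replica; the backup paths and `[YourAG]` are placeholders of mine.

```sql
-- Run on each new SQL 2019 replica, once per database.
-- Assumption: hypothetical backup paths; skip the restores if already done.
RESTORE DATABASE [American]
FROM DISK = N'\\backupshare\sql\American_full.bak'
WITH NORECOVERY;

RESTORE LOG [American]
FROM DISK = N'\\backupshare\sql\American_log.trn'
WITH NORECOVERY;

-- Join the restoring database to the availability group.
ALTER DATABASE [American] SET HADR AVAILABILITY GROUP = [YourAG];
```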

Also, I changed the failover mode to manual, just to make sure the cluster has no control over failing over my AG. I want total control over how and when my AG fails over until the entire upgrade process is complete. Hey BTW, did you take Full Backups?
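For reference, switching the existing replicas to manual failover can be scripted like this (`[YourAG]` is again a placeholder name):

```sql
-- Run on the current primary (W12SQL2016A).
ALTER AVAILABILITY GROUP [YourAG]
MODIFY REPLICA ON N'W12SQL2016A' WITH (FAILOVER_MODE = MANUAL);

ALTER AVAILABILITY GROUP [YourAG]
MODIFY REPLICA ON N'W12SQL2016B' WITH (FAILOVER_MODE = MANUAL);
```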

Did I mention, I have a table called “McD” in “American” database with one row in it? See below…

Now comes the fun part. Set the availability mode of one of the SQL 2019 instances to synchronous commit and perform a controlled manual failover. In my case, I changed W16SQL2019A to synchronous mode and failed over my AG from W12SQL2016A (my current primary) to W16SQL2019A.
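Scripted, the cutover would be something along these lines. Note the two different connections, and that `[YourAG]` is a placeholder. I would wait until the target shows SYNCHRONIZED before failing over, so the failover happens without data loss.

```sql
-- Step 1: run on the current primary (W12SQL2016A).
ALTER AVAILABILITY GROUP [YourAG]
MODIFY REPLICA ON N'W16SQL2019A' WITH (AVAILABILITY_MODE = SYNCHRONOUS_COMMIT);

-- Step 2: still on the primary, check that every database on the target is SYNCHRONIZED.
SELECT ar.replica_server_name,
       DB_NAME(drs.database_id) AS database_name,
       drs.synchronization_state_desc
FROM sys.dm_hadr_database_replica_states AS drs
JOIN sys.availability_replicas AS ar
    ON ar.replica_id = drs.replica_id
WHERE ar.replica_server_name = N'W16SQL2019A';

-- Step 3: connect to the failover target (W16SQL2019A) and run:
ALTER AVAILABILITY GROUP [YourAG] FAILOVER;
```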

Awesome! At this point, W16SQL2019A has taken over the primary role and all the databases participating in the AG have been upgraded to SQL 2019. The other SQL 2019 instance (W16SQL2019B in my case) will stay in sync from now on, but the two SQL 2014 instances will be in an unhealthy state. In fact, the databases on those replicas become inaccessible at this point, since log records can’t be shipped from a higher version (2019) to a lower one (2014). Duh!!!!!….

Perfectoo! Also, I have my table and data intact, double perfectoo!

If you are curious, this is what the error log looked like. You can clearly see the internal database version getting upgraded from SQL 2014 all the way to 2019.
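If you would rather check the version with a query than dig through the error log, something like this works on the new primary. Keep in mind the compatibility level is a separate setting that is not raised by the upgrade itself.

```sql
-- Run on the new primary (W16SQL2019A).
-- Internal database version: 782 for SQL Server 2014, 904 for SQL Server 2019.
SELECT name,
       DATABASEPROPERTYEX(name, 'Version') AS internal_version,
       compatibility_level  -- stays at the old level (120 for SQL 2014) until you change it
FROM sys.databases
WHERE name = N'American';
```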

Below is a screenshot showing what to expect on the old SQL instances after failing over the AG to the newer version.

Time to do some cleanup now. I removed both SQL 2014 instances as replicas from the AG and boom……PRESTO!

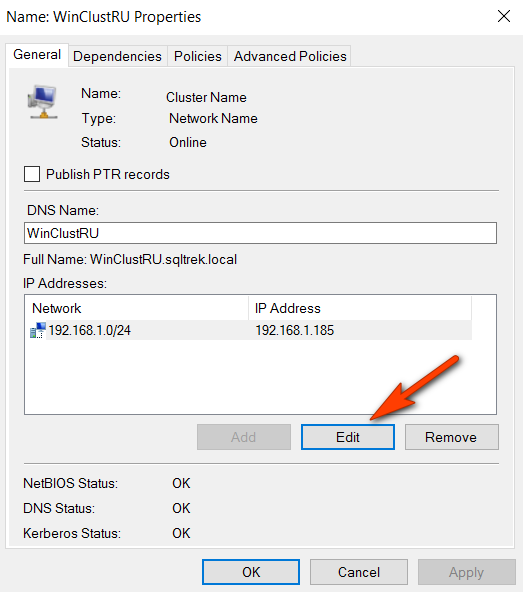

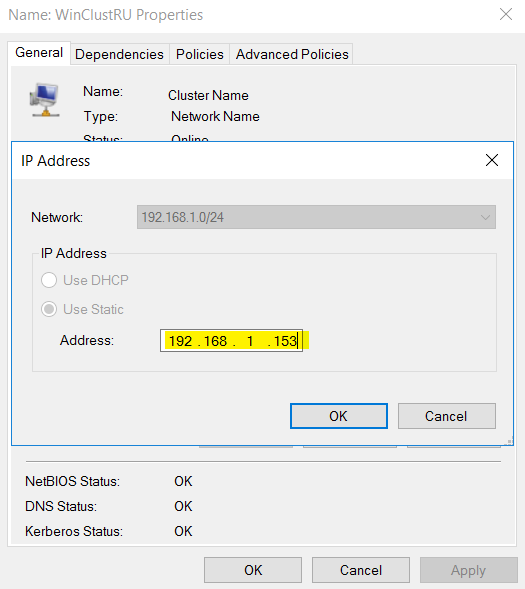
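The cleanup above can also be done in T-SQL. A minimal version, with `[YourAG]` standing in for your AG name:

```sql
-- Run on the new primary (W16SQL2019A).
ALTER AVAILABILITY GROUP [YourAG] REMOVE REPLICA ON N'W12SQL2016A';
ALTER AVAILABILITY GROUP [YourAG] REMOVE REPLICA ON N'W12SQL2016B';
```

The databases left behind on the old instances stay in a restoring state, so you can drop them whenever you are comfortable.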

The only thing left now is to take care of WSFC by evicting the old Windows Server 2012 R2 nodes and raising the functional level of the cluster to come out of mixed mode.

That’s it folks. Hope this is helpful, Cheers!